How we’re helping social media companies remove harmful content and protect their users

*Trigger warning: this article includes descriptions of harmful content relating to mental health issues, including but not limited to suicide, self-harm and eating disorders*

Social media platforms like Facebook and Instagram are coming under increasing pressure to protect their users from harmful content. Dr Ysabel Gerrard is working with them to tackle important questions about their algorithms, content moderation processes and ultimately their ability to safeguard users.

Social media has revolutionised how we communicate and interact with our world. Over three billion of us use it to connect with friends, document our lives and learn what’s going on in the world around us. In the early years, platforms used the simple process of likes and shares to keep us coming back for more. But, over time, they’ve developed sophisticated algorithms to feed us more of the content they’ve learned we want to see.

But algorithms alone can’t distinguish between what we want to see and what’s suitable for us to see. As a result, the darker side of social media can pervade people’s lives. Images of self-harm, eating disorders and suicide can pollute some people’s feeds on a daily basis and can have drastic, sometimes fatal, impacts on their mental health.

Dr Ysabel Gerrard is working with Facebook and Instagram to help them understand what kinds of content might be harmful and to make changes to their platforms, with the aim of preventing users from seeing troubling posts.

Ysabel is a Lecturer in Digital Media and Society at the University of ∫¨–fl≤›¥´√Ω. She also sits on the Facebook and Instagram Suicide and Self-Injury (SSI) Advisory Board. Ysabel specialises in understanding how social media content moderation works and how it could be regulated. Social media content moderation controls what we see and what gets banished to hidden parts of the platform, never removed but never viewed.

The challenge to prevent users from being exposed to harmful content has two key aspects. The first is knowing how content moderation works, allowing for the removal of content that is deemed problematic. The second is understanding how social media algorithms deliver content to users.

Social media platforms have been shown to be highly positive, supportive spaces for lots of people, especially those with stigmatised health conditions or identities. But potentially harmful content sadly still exists across platforms and it’s important that we regulate them properly.

In particular, it’s important that we pay attention to platforms’ recommendation algorithms (the process of showing users more content they might want to see). People who are already viewing content about self-harm, eating disorders and suicide are likely to get it recommended back to them, and we don’t know enough about the role this algorithmic process might play in their mental ill health.

Dr Ysabel Gerrard

University of ∫¨–fl≤›¥´√Ω and Facebook and Instagram Suicide and Self-Injury (SSI) Advisory Board

Algorithms are the foundations of social media platforms. They gather information about our likes and dislikes and use it to keep our interest, by showing us the content they determine we want to see. If that content is benign, like news about a favourite sports team or pictures of the top new travel destination, no harm is likely to occur. But if users have sought out content about mental illness or self-harm in the past, it may snowball into an avalanche of graphic and unsettling content.

Platforms have measures in place to prevent users from discovering this content in the first place. Prohibiting certain hashtags and manually purging the most harmful content are just some of the ways social media companies attempt to safeguard their users.

But this doesn't always work. Sometimes, harmful posts slip through the net.

‘Instagram and lots of other social media platforms ban people from searching for terms related to the promotion of eating disorders. But users can get around these bans by, for example, slightly misspelling certain words,’ explains Ysabel. The impact of this content can be devastating.

A fundamental problem facing social media companies is deciding what content should be considered harmful in the first place. This is where Ysabel plays an important role.

Ysabel understands content moderation, but she also has a deep understanding of mental health, particularly how people have long used the internet to talk about eating disorders. She couldn’t be better placed to help companies like Facebook and Instagram navigate these dilemmas. This is why she was asked to be part of the Facebook and Instagram Suicide and Self-Injury Advisory Board.

The board is a group of experts from different occupations, nationalities and specialisms who come together to discuss the regulations and policies around social media content. Some decisions they make are straightforward. Content that’s obviously harmful, like anything which promotes the worsening of an eating disorder, will be removed.

But some content is much harder to moderate.

What we do with images of, for example, self-harm scars or suicide notes has to be carefully considered. We don’t want to hide content like this, but we also want to make sure we don’t expose people to potentially triggering content, which means some of our decisions are really tough to make.

Dr Ysabel Gerrard

University of ∫¨–fl≤›¥´√Ω and Facebook and Instagram Suicide and Self-Injury (SSI) Advisory Board

What about images that contain graphic content, but show someone’s journey to a better state of mental health? For example, people sharing their journey to a healthy body weight while struggling with anorexia could encourage others suffering from the illness to seek help or provide inspiration for people who are on the same path.

But images of rib cages and other protruding bones can be trigger images for people who struggle with their body image. In May 2019, the Mental Health Foundation found that one in eight adults had considered taking their own lives because of concerns related to body image.

“The UK’s eating disorder charity Beat explains that people suffering with or recovering from an eating disorder can feel ‘triggered’. People’s triggers vary greatly and they aren’t always triggered by obviously harmful content on social media. This is why it’s difficult to make social media a fully ‘safe’ place for people with eating disorders and other mental health conditions,” explains Ysabel.

Ysabel’s contributions to the advisory board are therefore crucial to navigating the nuances each circumstance presents.

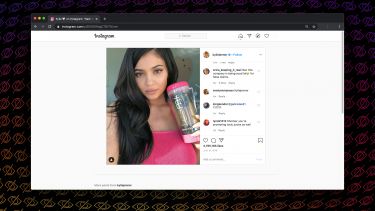

However, it’s not just these types of images which can influence our mental health. Celebrity endorsements also play a key role. Brands work with celebrities and influencers to gain access to their vast numbers of fans and followers. This means posts about new diet teas and appetite-suppressant lollipops can be distributed into millions of impressionable users’ feeds at alarming scale and speed.

“Diet products that make miracle claims about their ability to help someone lose weight demonstrate unethical advertising standards for a start, but there’s also a risk that such products might be used by people with eating disorders. For example, some miracle diet products have laxative or diuretic qualities and we have a lot of evidence that people with bulimia often misuse laxatives and diuretics to fuel weight loss,” says Ysabel.

But Facebook, and subsequently Instagram, have since made changes after seeking guidance from external experts like Ysabel.

“The advisory board and I spoke at length about this content. We discussed not only the link between weight loss products and eating disorders, but bigger cultural issues around Instagram and body image. After consulting with me and with various charities and healthcare organisations, Instagram decided to roll out its new policy,” says Ysabel.

From 18 September 2019, Instagram began enforcing its new policy targeting the abundance of weight-loss products and the miracle cures attached to them. The new rules prevent users under 18 from viewing posts promoting weight-loss products and impose an outright ban on any content that makes a ‘miraculous’ claim.

The challenge of protecting users from harmful content isn’t easy. The sheer volume of content posted every day makes this hard enough. But it also requires a deeper understanding of mental health and how different types of content can cause harm. The guidance and knowledge of experts like Ysabel is crucial to helping platforms implement the best possible approaches to content moderation and ultimately to safeguard us from content that can cause us harm.

If you or someone you care about has been affected by this article or any of the issues in it, you can find a list of mental health hotlines.

By Alicia Shephard, Research Marketing and Content Coordinator
